I bought a stereo system back in 2023. Like an ‘old school’ one, in the sense that it consists of a couple of bookshelf speakers and an amp. I don’t own any physical music media anymore – I left all my CDs behind when I moved to Germany in 2016, and just use Spotify now. So I needed a way to get Spotify on the stereo.

I am a big sucker for the brushed metal and the slightly overdone retro aesthetic

Now – normal people would do something like buy an amplifier that supports Spotify Connect directly, use Bluetooth audio, or buy a streaming device and be done with it. But I’ve been sorta trying to rethink my relationship with my phone and get some of my attention span back – and with these Spotify Connect systems you still have to unlock and use a phone to start the music. I don’t want to have to look at my notifications or do Face ID or potentially get sucked into an email / social media hole just to put music on.

So I decided to build something. After a couple of weeks, I’d gotten a piece of open-source software called librespot streaming from a Raspberry Pi over the stereo’s USB-audio connection. This works great[1] – but I still needed an interface.

I wanted:

- to be able to play music without having to look at my phone (this was the whole goal of the project)

- to be able to change the volume from my sofa. And pause the current song, and skip tracks…

What I wanted was a 💡 remote control. Something that doesn’t require line-of-sight, because I’ve got that Raspberry Pi in a cupboard somewhere. But the Raspberry Pi is just a Linux computer… so maybe I can just use a Bluetooth keyboard? Like a macropad?

Spoilers: here is the remote control.

Probably the coolest remote control ever

This is an Owlab Voice Mini and a bit of custom software running on the computer.

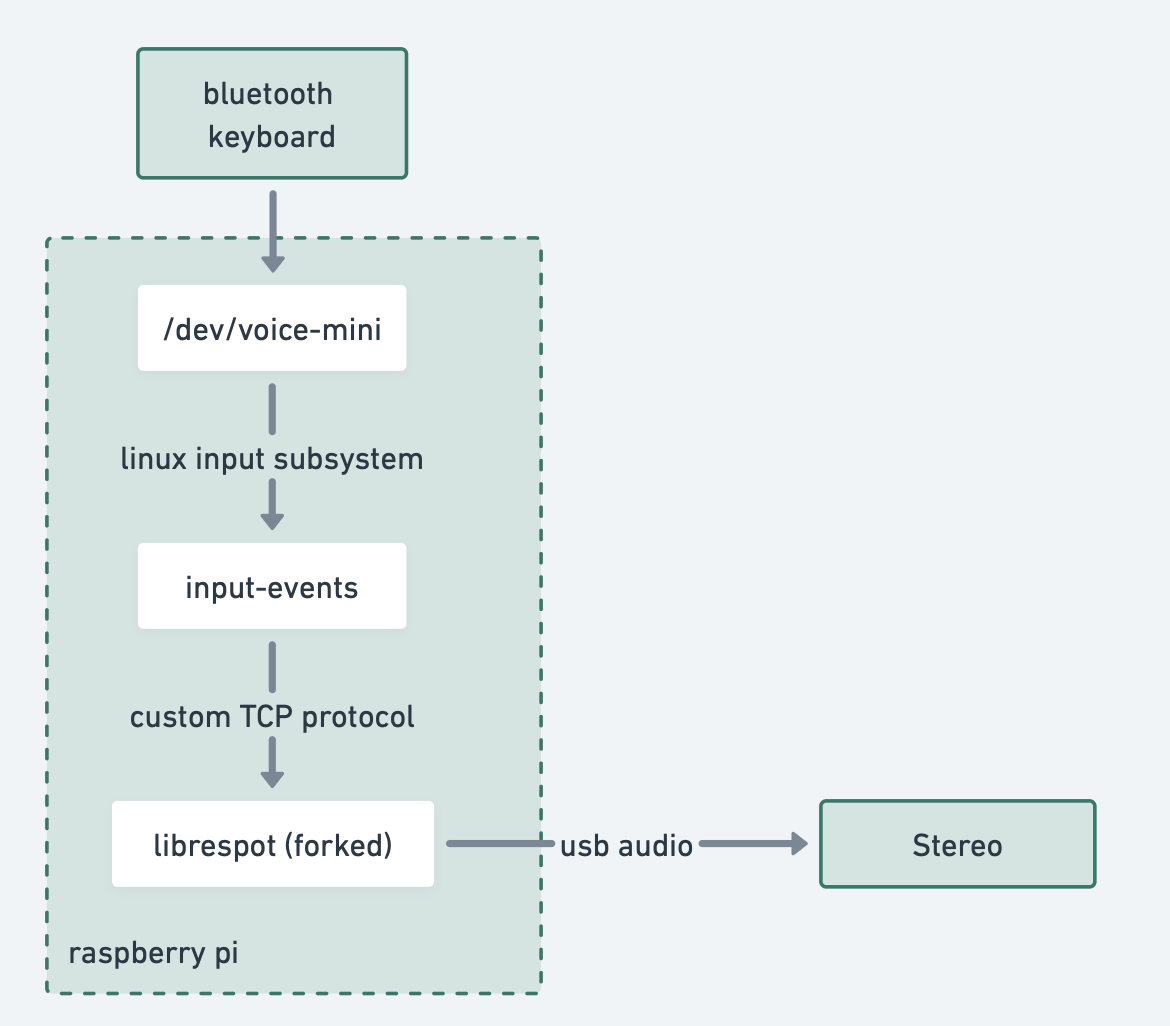

Here’s how it works:

- The top row of buttons is ⏮️ Prev | ⏯️ Play/Pause | ⏭️ Next.

- The big ISO Enter button is also Play/Pause. It makes a big satisfying “click” sound when you slap it.

- That little brown knob on the top right (behind the enter key) changes the volume.

But! It also supports playing albums. The awkward list icon button (middle-bottom, I can’t draw good) in combination with any of the other keys randomly picks an album matched to an ✨ energy ✨:

- Chill: think: easy listening. Sigur Ros. The Staves. Mogwai. The National.

- Fun: think: Frances Quinlan, Vampire Weekend, Ezra Collective, Royal Canoe.[2]

- RUDE: Queen, Tune-Yards, Fall of Troy, Battles, and (since last week after watching that Tiny Desk concert that’s doing the rounds) Doechii.

I love this playlist feature. I can get home, turn on the stereo (it’s got a super satisfying big button which makes a kchunk sound and everything lights up) and then slap a really satisfying ultra clicky mechanical keyboard switch and have the music start.

The system isn’t perfect –

- there’s no screen, and therefore no immediate feedback for your actions. I sometimes have to wait for the song to really start before I can figure out if it’s something I want to listen to.

- The Bluetooth keyboard won’t wake up from twiddling the volume knob, so you’ve gotta tap the fn key to wake it up first.

- It’s also probably the remote control with the worst battery life imaginable – about three days – and the on/off switch is literally underneath the keys.

WHYYYYYYYYYYYY

But I love it. I can leave my phone on charge and muted and kinda just roll the dice through some of my favourite albums until I get to something I wanna listen to.

Here’s how it works

Oh goodness, I think this is the first time I’ve put a block diagram into a blog post:

ok it’s not actually that complex

We’ll talk about all these pieces one-by-one.

Building the macropad

Like I said before, the macropad is an Owlab Voice Mini (in Latte). You still need to BYO switches + keycaps.

I think this is a render? img via Candykeys

I added some clicky clicky Cherry MX Green switches and some “relegendable keycaps” that I had lying around (these are super cool: you can draw on a piece of paper and then just slip it into the keycap).[3]

WIP; ISO Enter keys are weirdly difficult to source

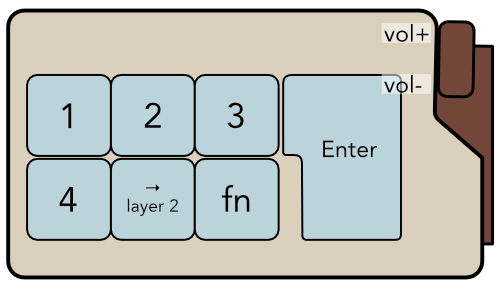

After building, we’ve gotta figure out what all the keys are mapped to, and (maybe) reprogram it. Here’s what I’ve got it set up for:

Layer 1 (normal)

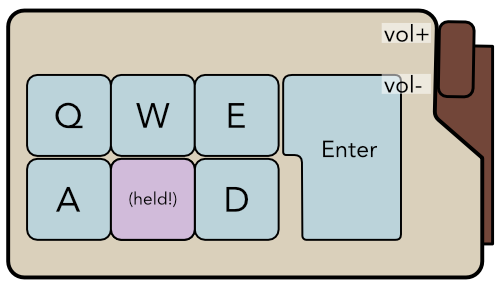

Layer 2 (playlists)

That is: pressing the top row of keys sends 1, 2, and 3 to the computer. Holding the “playlists / layer 2” key and pressing the top row of keys sends Q, W, and E to the computer.

We’ll now teach the computer how to interpret these keystrokes.

Changes to librespot

I added some custom code to librespot which listens on TCP port 2777 and processes text instructions. The protocol is super simple – it supports the following commands:

"PlayPause", "Next", "Prev", "VolUp", "VolDown"

plus a “load-and-play” command, which is Load/ followed by a Spotify URL (e.g. spotify:track:4PTG3Z6ehGkBFwjybzWkR8; you can retrieve Spotify URLs using these instructions).
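Because the protocol is just one line of text per command, the parsing side stays small. Here’s a minimal Python sketch of how a single line could be turned into a command. It is not the actual code from my librespot fork (librespot itself is written in Rust); it assumes one command per newline-terminated line and only checks that a Load/ argument starts with `spotify:`. `Command` and `parse_command` are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# The fixed, argument-free commands from the list above.
SIMPLE_COMMANDS = {"PlayPause", "Next", "Prev", "VolUp", "VolDown"}
LOAD_PREFIX = "Load/"


@dataclass(frozen=True)
class Command:
    name: str                  # "PlayPause", "Next", ... or "Load"
    uri: Optional[str] = None  # only set for Load/ commands


def parse_command(line: str) -> Command:
    """Parse one line of the remote-control protocol.

    Raises ValueError for anything that isn't a known command.
    """
    text = line.strip()  # drop the trailing "\r\n" that telnet sends
    if text in SIMPLE_COMMANDS:
        return Command(text)
    if text.startswith(LOAD_PREFIX):
        uri = text[len(LOAD_PREFIX):]
        # Assumption: only the scheme is checked (spotify:track:..., spotify:album:...).
        if uri.startswith("spotify:"):
            return Command("Load", uri)
    raise ValueError(f"unknown command: {text!r}")
```

Rejecting unknown input with an error (rather than silently ignoring it) makes typos obvious when you’re poking at the port by hand.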

Each of these commands is just a string, so when I was testing it was perfectly sufficient (and extremely satisfying) to SSH into the Raspberry Pi and write:

```
$ telnet localhost 2777
PlayPause
Next
Next
Prev
VolUp
VolUp
VolUp
VolUp
```

Every time I pressed Enter, I could hear the music respond to the command. Feels good! ✨
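Anything that can open a TCP socket can drive the player the same way telnet does. A minimal Python sketch, assuming the port accepts one command per newline-terminated line; `send_commands` and `send_to_librespot` are hypothetical helper names, not part of librespot.

```python
import socket
from typing import Iterable

# The remote-control port described above.
REMOTE_HOST = "localhost"
REMOTE_PORT = 2777


def send_commands(sock: socket.socket, commands: Iterable[str]) -> None:
    """Write each command to a connected socket, one per line.

    Assumption: the server reads newline-terminated commands.
    """
    for command in commands:
        sock.sendall(command.encode("utf-8") + b"\n")


def send_to_librespot(commands: Iterable[str],
                      host: str = REMOTE_HOST,
                      port: int = REMOTE_PORT) -> None:
    """Connect to the remote-control port and send a batch of commands."""
    with socket.create_connection((host, port), timeout=2.0) as sock:
        send_commands(sock, commands)
```

On the Pi, `send_to_librespot(["PlayPause", "Next"])` would replay the start of the telnet session above. Splitting the socket-writing part out makes it easy to test against a `socket.socketpair()` without a running player.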

input-events

I built a tiny program called input-events, which:

- Reads directly from the keyboard device (`/dev/voice-mini`) using the Linux input subsystem,[4]

- Translates those keyboard events into one of the above protocol commands using a config file, and

- Writes that command to `localhost:2777` (the ‘remote control’ port that I built into my librespot fork).
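Reading from the Linux input subsystem mostly means unpacking fixed-size `struct input_event` records from the device file. Here’s a minimal Python sketch of that first step, assuming the traditional layout (a `struct timeval`, then a 16-bit type, a 16-bit code and a 32-bit value) and mapping only a handful of key codes from `linux/input-event-codes.h` onto the key names used in the config below. `key_presses` and `KEY_NAMES` are hypothetical; this is not the actual input-events code.

```python
import struct
from typing import BinaryIO, Iterator

# struct input_event: struct timeval (two native longs), then
# __u16 type, __u16 code, __s32 value. 24 bytes on 64-bit Linux.
EVENT_FORMAT = "llHHi"
EVENT_SIZE = struct.calcsize(EVENT_FORMAT)

EV_KEY = 0x01
KEY_PRESS = 1  # 0 = release, 2 = autorepeat

# A few codes from linux/input-event-codes.h, named like the config keys.
KEY_NAMES = {
    2: "Num1",          # KEY_1
    3: "Num2",          # KEY_2
    4: "Num3",          # KEY_3
    16: "Q",            # KEY_Q
    17: "W",            # KEY_W
    18: "E",            # KEY_E
    28: "Enter",        # KEY_ENTER
    114: "VolumeDown",  # KEY_VOLUMEDOWN
    115: "VolumeUp",    # KEY_VOLUMEUP
}


def key_presses(device: BinaryIO) -> Iterator[str]:
    """Yield a config-style key name for every key press read from device."""
    while True:
        chunk = device.read(EVENT_SIZE)
        if len(chunk) < EVENT_SIZE:
            return  # device disconnected (or end of a test buffer)
        _sec, _usec, ev_type, code, value = struct.unpack(EVENT_FORMAT, chunk)
        # Skip sync events, releases, autorepeat and unmapped keys.
        if ev_type == EV_KEY and value == KEY_PRESS and code in KEY_NAMES:
            yield KEY_NAMES[code]
```

On the Pi this would be driven by something like `with open("/dev/voice-mini", "rb", buffering=0) as dev:` followed by `for key in key_presses(dev): ...`. Only presses are kept, so releases and autorepeat never fire a command twice.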

The config file looks something like this (here’s the full config):

```jsonc
{
  "Num1": "Prev",
  "Num2": "PlayPause",
  "Num3": "Next",
  "Enter": "PlayPause",
  "VolumeUp": "VolUp",
  "VolumeDown": "VolDown",

  // CHILL
  "Q": {
    "choose_one": [
      // The National: First Two Pages of Frankenstein
      "Load/spotify:album:5Mc6uebYtKnRc5I7bjlNB6",
      // The Staves: Good Woman
      "Load/spotify:album:66A7X1EqFQEEvuE5Nezqrl",
      // The Staves: If I Was
      "Load/spotify:album:2VxNr0ZeGhWJ8rQNe4d8vS",
      // ...
    ]
  },

  // FUN
  "W": {
    "choose_one": [
      // fun.
      "Load/spotify:album:7m7F7SQ3BXvIpvOgjW51Gp",
      // ...
    ]
  },

  // ...
}
```

And so (for example): pressing 1 will always run “Prev”, but pressing Q will choose and run one of the commands in that block randomly (and therefore, play one randomly selected ‘chill album’).
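The lookup itself is tiny: a binding is either a plain command string or a `choose_one` list. A minimal Python sketch, assuming the config has already been parsed into a dict (the real file has comments and trailing commas, so it would need a parser that accepts those first). `resolve`, `command_for_key` and the trimmed `CONFIG` are hypothetical.

```python
import random
from typing import Optional, Union

# A binding is either a plain command string or {"choose_one": [commands...]}.
Binding = Union[str, dict]

# Hypothetical, trimmed-down version of the config above, already parsed.
CONFIG = {
    "Num1": "Prev",
    "Num2": "PlayPause",
    "Q": {
        "choose_one": [
            "Load/spotify:album:5Mc6uebYtKnRc5I7bjlNB6",
            "Load/spotify:album:66A7X1EqFQEEvuE5Nezqrl",
            "Load/spotify:album:2VxNr0ZeGhWJ8rQNe4d8vS",
        ]
    },
}


def resolve(binding: Binding, rng: random.Random) -> str:
    """Turn one config entry into exactly one protocol command."""
    if isinstance(binding, str):
        return binding  # fixed mapping, e.g. "Num1" -> "Prev"
    return rng.choice(binding["choose_one"])  # random pick, e.g. one chill album


def command_for_key(config: dict, key: str, rng: random.Random) -> Optional[str]:
    """Look up a key name; keys missing from the config map to None."""
    binding = config.get(key)
    if binding is None:
        return None
    return resolve(binding, rng)
```

Passing in a `random.Random` keeps the choice reproducible in tests; a long-running service could just create one instance at startup.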

input-events is a separate program because when I built it, my iteration time for changes to librespot was huge. Decoupling made it much easier to iterate on the keyboard interaction, and that was the bit that I thought I’d have to prototype the most to get something which ‘feels right’.

This turned out to be a good idea – I haven’t touched the input-events program since I wrote it two years ago, even as I’ve had to recompile librespot to keep it up to date with whatever changes Spotify are making on their backend.

Testing an early version of the input-events program

More udev rules and another systemd service

I’ve written about udev before, but it was much easier the second time around. The rules this time:

- Ensure that the Voice Mini Bluetooth keyboard always shows up at the same spot (`/dev/voice-mini`).

- Boot the `input-events` service when the device connects and shut it down again when the device disconnects.

Here are the rules:

```
ACTION=="add", KERNEL=="event[0-9]*", SUBSYSTEM=="input", ATTRS{name}=="Voice Mini", SYMLINK+="voice-mini", RUN+="/usr/bin/su flopbox -c 'systemctl --user start input-events.service'"
ACTION=="remove", ENV{DEVLINKS}=="/dev/voice-mini", RUN+="/usr/bin/su flopbox -c 'systemctl --user stop input-events.service'"
```

The first rule basically says: when an input device named “Voice Mini” connects, make a symlink for it at /dev/voice-mini and boot the input-events service. The second rule says: when the device mapped to /dev/voice-mini is removed from the system, stop the input-events service.

That’s it!

I’m still very pumped about this contraption; I use it daily, and though it was a lot of work, I’m glad I put the time in. My takeaways here are:

- Decoupling the pieces of this system was a great idea.

- Low-level Linux input APIs were nowhere near as bad as I thought they’d be.

- It’s pretty rewarding to build bespoke things for your own use like this ✨.

[1] With the caveat that open-source clients are not officially supported, so sometimes Spotify just change things on their servers, and I have to go and recompile the latest version of the code to fix spontaneous crashes. But I’ve also seen that happen on my partner’s Sonos for a few weeks until Sonos got an update out, so all in all, I’m going to call the open-source solution a ‘win’.

[3] I was eyeing off the KAM Playground set for a while, but I kinda like how shitty (and how custom!) my keycaps are, and if anything I’d probably like to make my own.

[4] The Raspberry Pi isn’t running a window manager or anything, so I don’t have access to a higher-level input abstraction 🥲