When working with anything semi-automated, you sometimes run into a situation where the following are true:

- The environment you’re working in has a lot of interlocking pieces, and changing one thing can result in unexpected changes to lots of other things

- You have to change just one thing

- You want to make sure that there are no weird side effects.

Have you ever added an extra two or three words to a sentence in a word processor, and then found it had follow-on effects that changed the location of all the pictures on the next page? Then you know what I’m talking about!

GRRRRR.

In general, the more complexity a system handles, the more you have to worry about this. For the most part, the convenience is worth it – going back to our word processing example, it’s much nicer to check where all the pictures moved to than to throw out the page and start again (as you would on a typewriter), or to move a bunch of tiny metal letter blocks around (as you would on a printing press):

Adjusting text on a printing press (Deutsche Fotothek, CC-BY-SA-3.0-DE)

Automated Testing for Software

Software development has a reputation for exploding quickly in complexity like this [1]. In this field specifically, being able to re-use components is a double-edged sword – it makes development much, much quicker, but unless you’re careful, it means that you might accidentally break one thing when trying to fix another.

The most common way that developers prevent this nowadays is to write tests – essentially, a list of things that you expect your program to do given certain inputs. You get the computer to run these tests automatically every time you make a change, and if they all check out, then you haven’t broken anything (in theory).
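To make "a list of things that you expect your program to do given certain inputs" concrete, here's a minimal sketch of what such a test might look like. The `word_count` function is a hypothetical stand-in I've made up for illustration; it isn't from any real project.

```python
def word_count(text: str) -> int:
    """Hypothetical function under test: count whitespace-separated words."""
    return len(text.split())


def test_word_count() -> None:
    # Each assertion pairs an input with the output we expect for it.
    assert word_count("") == 0
    assert word_count("hello") == 1
    assert word_count("adding two or three words") == 5


# A test runner would normally discover and call this on every change;
# here we just call it directly.
test_word_count()
```

If someone later changes `word_count` in a way that breaks one of these expectations, the assertion fails and they find out straight away.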

Tests primarily achieve two things in software development:

- They’re really effective at encoding intention. That is, someone else can read your work and say “the program is supposed to be doing this one thing because that’s what the test script says”.

- They help enforce consistently correct behaviour. If they’re fast and well-automated enough, you can run all of them every time you make a change, which is a way of ensuring that the program’s behaviour doesn’t drift from that documented intention over time.

Good tests, however, are a lot of work to write. If you’re willing to drop the first goal, though – don’t bother writing down what the code’s supposed to be doing, just provide a way of verifying that it hasn’t changed – then there’s another approach you can take that still gives you a lot of the benefit.

Just diff it

“Diffing” is asking a computer to track or compute the differences between an old version of something and a new version of it. When you diff something, you can save your attention for just the things that are actually changing, which makes working on big, complex projects much simpler.

Consider: if someone edits your 90-page undergraduate thesis and you need to read through the whole thing manually to see what’s changed, it’s going to be a pain. If someone just marks it up with red pen (or Track Changes in Microsoft Word), then it’s easy to spot and zoom in on the specific edits. The more granular this change highlighting is, the better – it’s always possible to look into the surrounding context to see what’s going on, but it’s hard to find a needle in a haystack.

Tracking Changes in Microsoft Word

Computer programmers have been using “diffs” to communicate the changes they’ve made (or would like to make) to code since the 70s. The major difference between a “diff” and “track changes” is that a diff is generated by comparing two separate files, whereas track changes requires following all changes to a file as they’re happening. Tracking changes is therefore simpler – you “just” have to record what’s happening, not try to reverse-engineer what changed – but it’s less useful if you need to, say, compare two documents from different sources, or if you forgot to turn on tracking before you started making changes. Diffs are more flexible: you can obtain documents from separate sources and compute the differences retroactively.
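As a rough illustration of computing a diff after the fact from two separate versions, here's a small sketch using `difflib` from Python's standard library. The two "drafts" are made-up sample data, and this is just one of many ways to produce a diff.

```python
import difflib

# Two versions of the same (made-up) document, one line per list entry.
old_draft = [
    "Diffs compare two files.",
    "They date back to the 70s.",
    "The end.",
]
new_draft = [
    "Diffs compare two files.",
    "They date back to the 1970s.",
    "The end.",
]

# unified_diff works purely from the two versions; nobody had to
# "track changes" while the edit was being made.
diff_lines = list(
    difflib.unified_diff(
        old_draft, new_draft,
        fromfile="old_draft", tofile="new_draft",
        lineterm="",
    )
)

print("\n".join(diff_lines))
```

Lines starting with `-` were removed from the old version, lines starting with `+` were added in the new one, and lines starting with a space are unchanged context.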

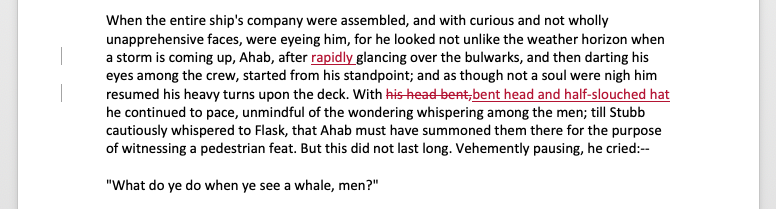

Diffing changes from the last saved draft of this article. This is a newer version of that same ‘diff’ program, initially released in the 1970s.

This flexibility makes diffing a useful strategy for checking that things haven’t changed when you don’t have proper behavioural tests.

How do you implement diffs as change canaries?

- Build your process / system / app.

- Grab some sample input from somewhere. Ensure it’s representative of what your system will be processing every day!

- Run it through your program.

- Manually verify that the output “looks right”, and save that output somewhere.

- Now, every time you modify something somewhere in your code, run the same input through the program again, and check that the output is the same using a diff tool. If something has changed, then either:

  - you were expecting it – verify that the new output “looks right” and save it as the “canonical version”.

  - you weren’t expecting it – figure out what went wrong and try again.

- If you’re writing computer software and have the ability to automatically run tests on every change, you can run this diff as part of your automated test suite [2].
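The steps above could be sketched roughly like this. It's a minimal illustration rather than a finished tool: `process` is a hypothetical stand-in for whatever your system does, and `check_against_canonical` is a helper name I've made up.

```python
import difflib
import tempfile
from pathlib import Path
from typing import List


def process(text: str) -> str:
    """Hypothetical stand-in for the system under test."""
    return "\n".join(line.strip().upper() for line in text.splitlines()) + "\n"


def check_against_canonical(sample_input: str, canonical_path: Path) -> List[str]:
    """Diff fresh output against the saved canonical version.

    Returns an empty list when nothing has changed. If no canonical file
    exists yet, the fresh output is saved as the canonical version. In real
    use you would check by hand that it "looks right" before saving it.
    """
    fresh = process(sample_input)
    if not canonical_path.exists():
        canonical_path.write_text(fresh, encoding="utf-8")
        return []
    saved = canonical_path.read_text(encoding="utf-8")
    return list(
        difflib.unified_diff(
            saved.splitlines(), fresh.splitlines(),
            fromfile="canonical", tofile="fresh", lineterm="",
        )
    )


with tempfile.TemporaryDirectory() as tmp:
    canonical = Path(tmp) / "canonical.txt"
    check_against_canonical("hello\nworld", canonical)         # first run saves the output
    print(check_against_canonical("hello\nworld", canonical))  # nothing changed: []
    print(check_against_canonical("hello\nthere", canonical))  # includes '-WORLD' and '+THERE'
```

In an automated test suite, the check would simply assert that the returned list is empty, and you'd overwrite the canonical file on purpose whenever a change is expected.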

Maximally effective diffing

Highly-granular diffing, thanks to good tooling

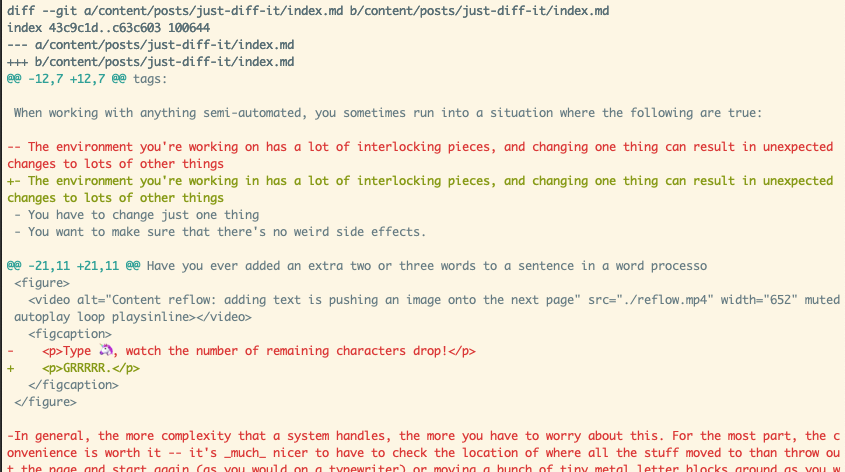

Diffing attempts to retroactively compute changes that get made to files, so it works best when the file format is well understood, and especially, when it’s well understood how it gets modified in response to edits. As such, diffing works best when your diff tool is explicitly designed for handling the specific type of file you’re attempting to diff. There’s extremely good support for diffing text files, so if your data can be represented as text, it will probably be ok. For images and binary / compressed / encrypted data, you’ll be able to tell that the content has changed, but not how, unless your diff tool explicitly understands the format [3].

This is still really useful for detecting unexpected changes, but it’s less useful for isolating a problem if something did change, because your diff results are less granular.
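Here's a small sketch of that difference in granularity, assuming your data happens to be JSON-like. The records and helper names are made up for illustration. Comparing hashes treats the data as an opaque blob, while writing it out as text with one key per line lets an ordinary line-based diff point at the exact field.

```python
import difflib
import hashlib
import json

# Made-up sample records: only the balance differs.
old = {"user": "ada", "balance": 100, "currency": "USD"}
new = {"user": "ada", "balance": 105, "currency": "USD"}


def fingerprint(data: dict) -> str:
    """Opaque comparison: a hash only reveals *that* something changed."""
    blob = json.dumps(data, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


def as_diffable_text(data: dict) -> list:
    """One key per line, in sorted order, so a line diff is granular."""
    return json.dumps(data, sort_keys=True, indent=2).splitlines()


something_changed = fingerprint(old) != fingerprint(new)

# n=0 drops the surrounding context so only the changed lines are shown.
detail = list(
    difflib.unified_diff(
        as_diffable_text(old), as_diffable_text(new),
        fromfile="old", tofile="new", lineterm="", n=0,
    )
)

print("changed:", something_changed)
print("\n".join(detail))
```

Sorting the keys matters here: without a stable ordering, two equivalent records could serialise differently and show up as spurious changes.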

Yep, super helpful, thanks

To conceptualise this better, let’s go back to our word-processing example. It’s not particularly useful to be told “something in this book is different, but I don’t know what”. It’s more useful to be told “something in Chapter 10 has changed” and still more useful to be told “I changed the third sentence on page 83”. When your diff tool fits your content type well, you get diffs mentioning the software equivalent of “the third sentence on page 83”; when your diff tool fits your content type badly, it’s more like “something in Chapter 10 has changed”.

The importance of pure functions

Additionally, diffing works best for pure functions.

Pure functions are a math concept – the basic idea is that the only thing that’s allowed to affect the output of a function is its input. It shouldn’t matter if you run the function by day, by night, once, fifty times, after reinstalling everything on your computer from scratch, today, or in 50 years. So long as the input data is the same, the output data should be too.

The most common issue when diffing things is that sometimes an external factor like the current time or a random number generator affects the output somehow. When this happens, you’ve got two choices:

- Try and anchor these external factors and keep them constant every time you perform the diff. This is hard for things like the current time, but is easy for things like the operating system that you’re running on, if that were to affect your process output.

- Make these impure functions into pure functions by converting these external factors into explicit inputs. Once they’re explicit inputs, they can be controlled, and then they become part of your sample input data set.
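To illustrate the second option, here's a minimal sketch of turning the clock and the random number generator into explicit inputs. The receipt functions and their parameters are hypothetical examples, not taken from any real system.

```python
import random
from datetime import datetime, timezone


def receipt_impure(amount: int) -> str:
    """Impure: the output depends on the clock and the global random generator."""
    stamp = datetime.now(timezone.utc).isoformat()
    ref = random.randint(1000, 9999)
    return f"{stamp} ref={ref} amount={amount}"


def receipt_pure(amount: int, now: datetime, seed: int) -> str:
    """Pure: the time and the random seed are passed in as ordinary inputs."""
    rng = random.Random(seed)  # private generator, fully determined by the seed
    ref = rng.randint(1000, 9999)
    return f"{now.isoformat()} ref={ref} amount={amount}"


# The fixed time and seed become part of the sample input data set.
FIXED_NOW = datetime(2020, 1, 1, tzinfo=timezone.utc)
FIXED_SEED = 42

first = receipt_pure(50, FIXED_NOW, FIXED_SEED)
second = receipt_pure(50, FIXED_NOW, FIXED_SEED)
print(first == second)  # True: same inputs, same output
```

The production code can still call `receipt_pure` with the real current time and a fresh seed; the point is that the diffing setup gets to choose those values instead.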

If your system isn’t a “pure function”, you’ll still be able to compute a diff, but you’ll notice that little bits and pieces always seem to be changing, unrelated to the changes that you’ve made. This is usually workable if your tools support highly-granular diffing – you’ll probably just get good at spotting and ignoring the bits that have changed because the environment has changed. If your tools don’t offer particularly granular diffs, or if the process mixes input and environment data, then interpreting diffs is going to be much more frustrating and error prone, and it’s especially worth investing in making your system under test into a pure function.

Wait a second, all of this sounds suspiciously similar to writing good tests…

Uh, yeah – you’re right. 😅

As I got further and further through this piece I realised I was having a hard time drawing a line between the two. I guess both diffs and automated tests exist on the same spectrum, and maybe it’s not super helpful to try and draw this line between the two? But I’ve found this angle helpful in my work, as I try to deliver and maintain things with an emphasis on reliability. Hopefully there’s been something helpful in here for you, too.

[1] Actually, I guess all design and engineering projects have a reputation for this kind of complexity – but I’ll stick to what I know here.

[2] We do this at Sendwave for the outputs of many of our web service responses. It works well for us because we don’t have anything explicitly defining what our HTTP response should look like – we just return big blobs of JSON to clients, and don’t have any explicit schema or service definitions. The “canonical version” that’s saved as part of our automated test suite ends up becoming the service definition, in a way.

[3] Specifically with regards to images: it’s usually not a big problem that many diff tools can’t handle them, because the human eye is really good at spotting differences between images. It’s usually sufficient to open both images and compare them side by side.