I’m picking up a project again which, for a complicated set of reasons, requires me to make changes to a large Rust application which is going to run on the Raspberry Pi.

There’s just one problem:

- The Rust compiler requires a lot of RAM and CPU

- My Raspberry Pi (model 3+) has exactly 1GB of RAM and a very small CPU[1].

But hey, let’s give it a shot anyway. How bad can it be?

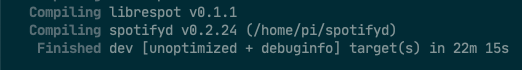

… 22 minutes. That’s how bad.

I decided to try and build the code on a much faster computer instead, and then copy the produced binary onto the Raspberry Pi.

Cross-compiling pure Rust is really easy

Compiling code from one computer for another computer is called cross-compiling, and it’s historically been horrendous. Modern Rust makes it mostly not horrendous; you can cross-compile a hello-world program in ~5 shell commands:

```sh
# Make sure GCC's linker for the target platform is installed on your system
apt install gcc-arm-linux-gnueabihf

# Install the standard library for the target platform
rustup target add armv7-unknown-linux-gnueabihf

# Create a hello-world program
cargo new helloworld-rust && cd helloworld-rust

# Tell cargo to use the linker you just installed rather than the default
export CARGO_TARGET_ARMV7_UNKNOWN_LINUX_GNUEABIHF_LINKER=/usr/bin/arm-linux-gnueabihf-gcc

# Build!
cargo build --target=armv7-unknown-linux-gnueabihf
```
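A quick way to convince yourself the binary really was built for the Pi (my own addition, not one of the steps above) is a variant of the generated hello-world that reports the platform it was compiled for. `std::env::consts::OS` and `std::env::consts::ARCH` are fixed at compile time, so they reflect the `--target` you built with, not the machine the binary happens to run on. A minimal sketch:

```rust
use std::env::consts::{ARCH, OS};

/// Describe the platform this binary was compiled for. These constants are
/// baked in at compile time, so a cross-compiled binary reports its target.
fn build_target_description() -> String {
    format!("{}/{}", OS, ARCH)
}

fn main() {
    println!("Hello, world! (built for {})", build_target_description());
}
```

Built with `--target=armv7-unknown-linux-gnueabihf` and run on the Pi, this should print `Hello, world! (built for linux/arm)`.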

Even better, the excellent people from the Rust-Embedded Working Group have released a tool called cross which abstracts a lot of the setup into Docker containers, so, assuming you’ve got Docker installed, you can just write:

```sh
cargo install cross

# Just replace the word 'cargo' with the word 'cross' in your
# build command
cross build --target=armv7-unknown-linux-gnueabihf
```

This is a huge improvement on the former state of the art – just install two programs, and run the build command! But the simplicity starts to fall apart once you need to link external libraries – those that weren’t written in Rust and are (usually) distributed using a mechanism other than Rust’s cargo package manager. Just because it’s fiddly, though, doesn’t mean it’s not tractable. In this post, we’re going to make it work for a pretty simple project.

Let’s get started!

Let’s talk about external libraries in Rust

There are lots of great libraries that have been written in languages which aren’t Rust. If we want to use them from our Rust code, we need to interoperate over a Foreign Function Interface – often referred to as FFI interop[3]. The Rust ecosystem has a really nice convention for crates that do this:

- Make a crate named `foo-sys`, which defines the FFI for the `foo` (non-Rust) system library. This is the ‘minimum viable’ binding to the library from Rust; it should basically attempt to map C functions directly to Rust and not translate anything to Rust types or paradigms.[4]

- Then, wrap the functionality from `foo-sys` in Rust-ier code, i.e. build a translation layer from the low-level, unsafe Rust bindings to something that’s more idiomatic, and doesn’t require `unsafe` blocks everywhere.
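To make the two layers concrete, here’s a sketch that uses `strlen` from the C standard library (which every Linux Rust program already links) as a stand-in for a real `foo` library. The module names `foo_sys` and `foo` are hypothetical. The `-sys` layer declares the C signature as-is; the wrapper hides the raw pointer and the `unsafe` behind a safe function:

```rust
use std::ffi::CStr;

/// The "foo-sys" layer (hypothetical name): a raw, 1:1 declaration of the
/// C function, using C types and making no attempt to be idiomatic.
mod foo_sys {
    use std::os::raw::c_char;

    unsafe extern "C" {
        // C signature: size_t strlen(const char *s);
        pub fn strlen(s: *const c_char) -> usize;
    }
}

/// The idiomatic layer (hypothetical name): callers pass a `&CStr`, which
/// guarantees a valid, NUL-terminated string, so this function can be safe.
mod foo {
    use super::foo_sys;
    use std::ffi::CStr;

    pub fn c_string_len(s: &CStr) -> usize {
        // SAFETY: `CStr` guarantees the pointer is non-null and
        // NUL-terminated for as long as `s` is borrowed.
        unsafe { foo_sys::strlen(s.as_ptr()) }
    }
}

fn main() {
    let s = CStr::from_bytes_with_nul(b"raspberry\0").expect("valid C string");
    println!("{}", foo::c_string_len(s)); // 9
}
```

Code that depends only on `foo` never has to write `unsafe`; that’s the whole point of the second layer.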

For us, this is relevant because:

- Getting all the `*-sys` crates to build is the hard part of what we’re doing in this blog post

- The fact that they all have the same suffix means we can use `cargo tree --prefix none | grep -- '-sys '` to enumerate them all ✨

Enumerating the dependencies

Throughout this article, we’re going to be working with spotifyd, an open-source background service that streams from Spotify.

Running `cargo tree --target armv7-unknown-linux-gnueabihf --prefix none | grep -- '-sys ' | sort` shows the `*-sys` dependencies which we’re going to need to link:

```text
alsa-sys v0.1.2
alsa-sys v0.1.2 (*)
backtrace-sys v0.1.32
backtrace-sys v0.1.32
ogg-sys v0.0.9
openssl-sys v0.9.53
openssl-sys v0.9.53 (*)
```
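The `(*)` suffix is how `cargo tree` marks a package whose dependencies were already printed earlier, so the list above contains repeats. If you want a clean, de-duplicated list (say, to feed into a script), here’s a small helper of my own that does the job of the `grep`/`sort` pipeline and also drops the repeats. The `spotifyd` line in the sample input is just there to show non-`-sys` crates being filtered out:

```rust
use std::collections::BTreeSet;

/// Extract the unique `*-sys` crates (name + version) from the output of
/// `cargo tree --prefix none`, where lines look like `alsa-sys v0.1.2 (*)`.
fn sys_crates(cargo_tree_output: &str) -> Vec<String> {
    // A BTreeSet keeps entries sorted and unique, like `sort -u`.
    let mut found = BTreeSet::new();
    for line in cargo_tree_output.lines() {
        let mut parts = line.split_whitespace();
        if let (Some(name), Some(version)) = (parts.next(), parts.next()) {
            if name.ends_with("-sys") {
                found.insert(format!("{name} {version}"));
            }
        }
    }
    found.into_iter().collect()
}

fn main() {
    let output = "spotifyd v0.2.24\nalsa-sys v0.1.2\nalsa-sys v0.1.2 (*)\n\
                  backtrace-sys v0.1.32\nbacktrace-sys v0.1.32\nogg-sys v0.0.9\n\
                  openssl-sys v0.9.53\nopenssl-sys v0.9.53 (*)\n";
    for krate in sys_crates(output) {
        println!("{krate}");
    }
}
```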

Some of these dependencies link the external library statically, some dynamically, and one (openssl-sys) gives you the choice 😅
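Which of the two you get is usually decided in the crate’s `build.rs`: Cargo reads `cargo:rustc-link-lib=static=NAME` (link the `.a` archive) or `cargo:rustc-link-lib=dylib=NAME` (link the shared `.so`) from the build script’s stdout. `openssl-sys`, for example, checks an `OPENSSL_STATIC` environment variable. Below is a sketch of how a hypothetical `foo-sys` build script might offer the same choice through a hypothetical `FOO_STATIC` variable; the real `openssl-sys` logic is considerably more involved, so treat this as an illustration of the mechanism only:

```rust
use std::env;

/// Build the `cargo:` directive telling rustc how to link `lib_name`.
fn link_directive(lib_name: &str, link_static: bool) -> String {
    let kind = if link_static { "static" } else { "dylib" };
    format!("cargo:rustc-link-lib={kind}={lib_name}")
}

fn main() {
    // Hypothetical opt-in: FOO_STATIC=1 selects static linking.
    let link_static = env::var("FOO_STATIC").map(|v| v == "1").unwrap_or(false);

    // Ask Cargo to re-run this build script if the variable changes.
    println!("cargo:rerun-if-env-changed=FOO_STATIC");
    println!("{}", link_directive("foo", link_static));
}
```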

To figure out what packages you’ll need on your system to support the build, your options are:

- Reading the docs / build / CI scripts for the project you’re building

- Reading the docs for the crates or the `build.rs` scripts in their repos

- Compiling and fixing the errors as they crop up.

Spotifyd’s README indicated that I’d need a system with alsa (aka `libasound2-dev`) and openssl (aka `libssl-dev`) available as dynamic libraries[5] (the other two that we found with `cargo tree` – `backtrace-sys` and `ogg-sys` – link statically).

So, it’s time to make some changes to our Docker container.

Installing libraries for the target architecture

Now, we can theoretically tell the Ubuntu install on the Docker container to “enable the Raspberry Pi architecture (armhf) and install the required packages”:

```dockerfile
# Use the container that comes with `cross` as a base. It's already got
# a cross-compile toolchain installed, so that's less work for us.
FROM rustembedded/cross:armv7-unknown-linux-gnueabihf-0.2.1

RUN apt-get update
RUN dpkg --add-architecture armhf && \
    apt-get update && \
    apt-get install --assume-yes libssl-dev:armhf libasound2-dev:armhf
```
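Three different names for the same platform are flying around at this point: Rust’s target triple (`armv7-unknown-linux-gnueabihf`), Debian’s architecture name (`armhf`, used by `dpkg --add-architecture` and the `:armhf` package suffix), and the GNU multiarch triplet (`arm-linux-gnueabihf`, used in the linker’s name and in library paths). A tiny lookup table helps keep them straight. This is my own illustration, and it deliberately covers only a few common targets:

```rust
/// Names for one platform as seen by different tools.
#[derive(Debug, PartialEq)]
struct PlatformNames {
    debian_arch: &'static str,       // `dpkg --add-architecture`, `pkg:arch`
    multiarch_triplet: &'static str, // `/usr/lib/<triplet>`, `<triplet>-gcc`
}

fn platform_names(rust_target: &str) -> Option<PlatformNames> {
    let (debian_arch, multiarch_triplet) = match rust_target {
        "armv7-unknown-linux-gnueabihf" => ("armhf", "arm-linux-gnueabihf"),
        "aarch64-unknown-linux-gnu" => ("arm64", "aarch64-linux-gnu"),
        "x86_64-unknown-linux-gnu" => ("amd64", "x86_64-linux-gnu"),
        _ => return None,
    };
    Some(PlatformNames { debian_arch, multiarch_triplet })
}

impl PlatformNames {
    /// Where Debian's multiarch packages put their pkg-config `.pc` files.
    fn pkgconfig_dir(&self) -> String {
        format!("/usr/lib/{}/pkgconfig", self.multiarch_triplet)
    }

    /// The cross C compiler/linker, e.g. from `gcc-arm-linux-gnueabihf`.
    fn gcc(&self) -> String {
        format!("/usr/bin/{}-gcc", self.multiarch_triplet)
    }
}

fn main() {
    let names = platform_names("armv7-unknown-linux-gnueabihf").unwrap();
    println!("apt-get install libssl-dev:{}", names.debian_arch);
    println!("linker: {}", names.gcc());
    println!("pkg-config dir: {}", names.pkgconfig_dir());
}
```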

Now build and tag the image:

```sh
# The name / version here are arbitrary
docker build -t crossbuild:local .
```

… and then tell cross to use that image for the armv7-unknown-linux-gnueabihf platform by making a Cross.toml like this:

```toml
[target.armv7-unknown-linux-gnueabihf]
# The tag name from the `docker build` command
image = "crossbuild:local"
```

Let’s try building:

```text
cross build --target=armv7-unknown-linux-gnueabihf
...
error: failed to run custom build command for `alsa-sys v0.1.2`

Caused by:
  process didn't exit successfully: `/target/debug/build/alsa-sys-890a4e720127b72d/build-script-build` (exit code: 101)
--- stderr
thread 'main' panicked at 'called `Result::unwrap()` on an `Err` value: "`\"pkg-config\" \"--libs\" \"--cflags\" \"alsa\"` did not exit successfully: exit code: 1\n--- stderr\nPackage alsa was not found in the pkg-config search path.\nPerhaps you should add the directory containing `alsa.pc\'\nto the PKG_CONFIG_PATH environment variable\nNo package \'alsa\' found\n"', /cargo/registry/src/github.com-1ecc6299db9ec823/alsa-sys-0.1.2/build.rs:4:38
note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace
```

Ok, we’ve got a big error message hidden in the output there. Here it is, reformatted for your convenience:

> Package `alsa` was not found in the `pkg-config` search path. Perhaps you should add the directory containing `alsa.pc` to the `PKG_CONFIG_PATH` environment variable.

It looks like the alsa-sys crate is using pkg-config to resolve dependencies. I’ve never even heard of pkg-config before, so let’s figure out what that is about.
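The failing command is right there in the panic message: the build script runs `pkg-config --libs --cflags alsa` and unwraps the result. When that succeeds, it prints compiler flags such as `-L<dir>` and `-l<name>`, which the `pkg-config` crate turns into Cargo link directives. Here’s a simplified sketch of that round trip. It is not the crate’s actual code, and it ignores include paths and every flag other than `-L` and `-l`:

```rust
use std::process::Command;

/// Turn `pkg-config --libs` output into Cargo link directives.
/// Simplified: only `-L` and `-l` are handled; other flags are skipped.
fn link_directives(pkg_config_output: &str) -> Vec<String> {
    pkg_config_output
        .split_whitespace()
        .filter_map(|flag| {
            if let Some(dir) = flag.strip_prefix("-L") {
                Some(format!("cargo:rustc-link-search=native={dir}"))
            } else if let Some(lib) = flag.strip_prefix("-l") {
                Some(format!("cargo:rustc-link-lib={lib}"))
            } else {
                None
            }
        })
        .collect()
}

/// Ask the system's pkg-config about `package`, like the build script does.
fn probe(package: &str) -> Result<String, String> {
    let output = Command::new("pkg-config")
        .args(["--libs", "--cflags", package])
        .output()
        .map_err(|e| format!("couldn't run pkg-config: {e}"))?;
    if output.status.success() {
        Ok(String::from_utf8_lossy(&output.stdout).into_owned())
    } else {
        // This is where "Package alsa was not found..." comes from.
        Err(String::from_utf8_lossy(&output.stderr).into_owned())
    }
}

fn main() {
    match probe("alsa") {
        Ok(flags) => {
            for directive in link_directives(&flags) {
                println!("{directive}");
            }
        }
        Err(message) => eprintln!("pkg-config failed:\n{message}"),
    }
}
```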

(Re-)configuring pkg-config

On Linux, a good first step for unfamiliar programs is to consult the manual:

```text
$ man pkg-config
...
DESCRIPTION
       The pkg-config program is used to retrieve information about
       installed libraries in the system.
       It is typically used to compile and link against one or more
       libraries. Here is a typical usage scenario in a Makefile:

       program: program.c
            cc program.c $(pkg-config --cflags --libs gnomeui)

       pkg-config retrieves information about packages from special
       metadata files. These files are named after the package, and
       has a .pc extension. On most systems, pkg-config looks in
       /usr/lib/pkgconfig, /usr/share/pkgconfig, /usr/local/lib/
       pkgconfig and /usr/local/share/pkgconfig for these files.
       It will additionally look in the colon-separated (on Windows,
       semicolon-separated) list of directories specified by the
       PKG_CONFIG_PATH environment variable.
```
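Put as code, the search described in that man page excerpt looks roughly like the sketch below: the directories from `PKG_CONFIG_PATH` (split on `:`) are tried first, then the defaults, and the first directory containing `<package>.pc` wins. This is a simplified model for intuition, not pkg-config’s real implementation, and it deliberately leaves out `PKG_CONFIG_LIBDIR`. The `exists` parameter is my own addition so the lookup can be tested without touching the filesystem:

```rust
use std::path::{Path, PathBuf};

/// Default directories, as listed in the man page excerpt.
const DEFAULT_DIRS: [&str; 4] = [
    "/usr/lib/pkgconfig",
    "/usr/share/pkgconfig",
    "/usr/local/lib/pkgconfig",
    "/usr/local/share/pkgconfig",
];

/// Directories to search, in order: PKG_CONFIG_PATH entries, then defaults.
fn search_dirs(pkg_config_path: Option<&str>) -> Vec<PathBuf> {
    let extra = pkg_config_path
        .into_iter()
        .flat_map(|p| p.split(':'))
        .filter(|dir| !dir.is_empty())
        .map(PathBuf::from);
    extra
        .chain(DEFAULT_DIRS.iter().map(|d| PathBuf::from(*d)))
        .collect()
}

/// Return the first `<package>.pc` for which `exists` returns true.
fn find_pc(package: &str, dirs: &[PathBuf], exists: impl Fn(&Path) -> bool) -> Option<PathBuf> {
    dirs.iter()
        .map(|dir| dir.join(format!("{package}.pc")))
        .find(|candidate| exists(candidate.as_path()))
}

fn main() {
    let path = std::env::var("PKG_CONFIG_PATH").ok();
    let dirs = search_dirs(path.as_deref());
    match find_pc("alsa", &dirs, |p| p.exists()) {
        Some(pc) => println!("found {}", pc.display()),
        None => println!("alsa.pc not found in any search directory"),
    }
}
```

Inside our container, `alsa.pc` lives in none of those default directories, which is exactly why the lookup fails.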

Oh, that’s helpful! Now that we’ve got more context, it seems sensible to do what that error message asked us to.

First, let’s fire up a shell inside our Docker container:

```sh
docker run -it crossbuild:local bash
```

…and find that alsa.pc file that the error message asked for:

```text
root@51890f6164b9:/# find / -name alsa.pc
/usr/lib/arm-linux-gnueabihf/pkgconfig/alsa.pc
```

Theoretically we could just do exactly what the error message says, and set `PKG_CONFIG_PATH=/usr/lib/arm-linux-gnueabihf/pkgconfig`. But I have four days of hard-won experience now, so I’m going to jump ahead and say: don’t do that, Dear Reader; you’d potentially be making things more confusing for yourself in the future.

What you’re apparently supposed to do on multi-architecture Debian-based systems is use `arm-linux-gnueabihf-pkg-config` instead of `pkg-config`. If we just set `PKG_CONFIG_PATH`, we’re telling the regular `pkg-config` (which finds dependencies for your host system) to also find dependencies built for your target system, which is going to result in mayhem at link time when you’re building dependencies for the host system[6].

Now, arm-linux-gnueabihf-pkg-config is just a symlink…

```text
$ ls -l `which arm-linux-gnueabihf-pkg-config`
lrwxrwxrwx 1 root root 34 Oct 21 16:53 /usr/bin/arm-linux-gnueabihf-pkg-config -> /usr/share/pkg-config-crosswrapper*
```

…which links to a shell script you can read! The short version is that it sets the PKG_CONFIG_LIBDIR environment variable and then invokes pkg-config.
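In Rust terms, that wrapper amounts to something like the sketch below: build a `pkg-config` command whose environment has `PKG_CONFIG_LIBDIR` pointing at the target’s multiarch directory. I’m assuming the single directory we found with `find` earlier; the real `pkg-config-crosswrapper` script may well set more than that, so read it to be sure. The function only builds the `Command`, so you can inspect it before running it:

```rust
use std::process::Command;

/// Build a pkg-config invocation that only sees `.pc` files for `triplet`,
/// by setting PKG_CONFIG_LIBDIR (assumed value: the multiarch pkgconfig dir).
fn cross_pkg_config(triplet: &str, args: &[&str]) -> Command {
    let mut cmd = Command::new("pkg-config");
    cmd.env("PKG_CONFIG_LIBDIR", format!("/usr/lib/{triplet}/pkgconfig"))
        .args(args);
    cmd
}

fn main() {
    let cmd = cross_pkg_config("arm-linux-gnueabihf", &["--libs", "alsa"]);
    println!("program: {:?}", cmd.get_program());
    for (key, value) in cmd.get_envs() {
        println!("env: {:?}={:?}", key, value);
    }
    // To actually run it: `cross_pkg_config(...).output()`
}
```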

What’s the difference between PKG_CONFIG_LIBDIR and PKG_CONFIG_PATH? According to Cross Compiling With pkg-config:

> Note that when specifying `PKG_CONFIG_LIBDIR`, `pkg-config` will completely ignore the content in `PKG_CONFIG_PATH`

This sounds closer to what we want, because it means we’re not mixing our architectures. We could definitely still get into a situation where pkg-config chooses target dependencies for host builds, but at least it will happen more… consistently now? Maybe??

Fortunately, the creators of the pkg-config crate anticipated this problem, and have a solution: they allow you to set target-scoped PKG_CONFIG_* environment variables. In short, this means that, rather than use PKG_CONFIG_LIBDIR, we can use PKG_CONFIG_LIBDIR_[target] and the pkg-config crate will take care of rewriting it to PKG_CONFIG_LIBDIR whenever we’re building for that [target]. Amazing!
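Here’s a sketch of how such a target-scoped lookup can work, modelled on the order I understand the `pkg-config` crate’s documentation to describe: the exact target triple first, then the triple with `-` replaced by `_` (the form our Dockerfile uses, which is friendlier to shells), then a `TARGET_`- or `HOST_`-prefixed variable, then the plain name. It reads from a `HashMap` instead of the real environment so it’s easy to test; the crate’s README is the authority on the exact rules:

```rust
use std::collections::HashMap;

/// Look up `var` (e.g. "PKG_CONFIG_LIBDIR") from most to least specific name.
fn targeted_var<'a>(
    env: &'a HashMap<String, String>,
    var: &str,
    target: &str,
    host: &str,
) -> Option<&'a str> {
    let kind = if target == host { "HOST" } else { "TARGET" };
    let candidates = [
        format!("{var}_{target}"),
        format!("{var}_{}", target.replace('-', "_")),
        format!("{kind}_{var}"),
        var.to_string(),
    ];
    candidates
        .iter()
        .find_map(|name| env.get(name).map(String::as_str))
}

fn main() {
    let mut env = HashMap::new();
    env.insert(
        "PKG_CONFIG_LIBDIR_armv7_unknown_linux_gnueabihf".to_string(),
        "/usr/lib/arm-linux-gnueabihf/pkgconfig".to_string(),
    );
    let host = "x86_64-unknown-linux-gnu";
    let target = "armv7-unknown-linux-gnueabihf";

    // Building for the Pi: the target-scoped value is picked up.
    println!("{:?}", targeted_var(&env, "PKG_CONFIG_LIBDIR", target, host));
    // Building for the host: the armv7-scoped value is ignored.
    println!("{:?}", targeted_var(&env, "PKG_CONFIG_LIBDIR", host, host));
}
```

This is why target-scoping avoids the host/target mix-up described above: a host build simply never sees the armv7 directory.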

Let’s add a target-scoped version of PKG_CONFIG_LIBDIR to our container. Our Dockerfile now looks like this:

```dockerfile
FROM rustembedded/cross:armv7-unknown-linux-gnueabihf-0.2.1

RUN apt-get update
RUN dpkg --add-architecture armhf && \
    apt-get update && \
    apt-get install --assume-yes libssl-dev:armhf libasound2-dev:armhf

# New!
ENV PKG_CONFIG_LIBDIR_armv7_unknown_linux_gnueabihf=/usr/lib/arm-linux-gnueabihf/pkgconfig
```

Rebuild the docker container, and rerun cross:

```sh
docker build -t crossbuild:local .
cross build --target=armv7-unknown-linux-gnueabihf
```

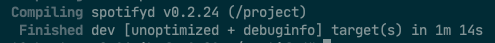

And this time it works.

Yesssssssss 🥳

Now copy it across to the Raspberry Pi and run it:

```text
$ ./spotifyd --version
spotifyd 0.2.24
```

And we’re done. 🎉

That’s it! Or is it?

We’ve made a lot of progress on a cross-compile setup in this post! If the approach here works for you[7] then I strongly recommend sticking to it. For me, it turned out that this only worked due to sneaky, arcane reasons, which I discovered when I decided I also wanted to build the `dbus_mpris` feature. Stick around for part 2, where I trial and error more shit until I get a system that works in more esoteric circumstances. ✨

[1] Faster than I expected though! It’s a 64-bit 1.2GHz quad-core, which is crazy good for the price.

[2] I managed to save the one-minute startup time (!) by keeping `cross`’s Docker container around and then invoking the build command inside it, rather than restarting the container on every invocation, and then saved another 4 minutes per build by messing around with the way that `cross` mounts OSX volumes. That’s still 17 minutes for a clean build, though.

[3] It’s sometimes also called C-interop, because regardless of the languages the two pieces of compiled code were written in, they’re usually bridged together using function definitions written in C.

[4] Making a *-sys crate is a great high-level guide to the nuances involved here.

[5] The readme also lists `dbus` and `pulseaudio`, which are required for optional features. We’ll eventually want `dbus` so we can turn on the `dbus_mpris` feature, but in the interest of incrementalism, let’s add that later.

[6] It might seem unlikely that both the host and target systems would build / link the same external code, but it’s really, really not! When cross-compiling `spotifyd`, for example, the `backtrace-sys` crate is compiled both for the host architecture (it’s used in a Rust program to compile some other non-Rust files) and for the target architecture. I got burned after setting `CPATH` for the entire build when the build for `backtrace-sys` started including `armv7hf` headers for an `x86_64` compile target.

[7] It does for a lot of people! And this is in fact what the `cross` documentation suggests as a default.